January 15, 2016

Since October 2015 I have been fighting with an application programming interface (API) that works with an enterprise resource planning (ERP) system. The API, with uses Microsoft .Net 4.0 or higher, is intended to simplify the process of automating the creation of various types of documents within the ERP system. For example, the API could be used to quickly create 1,000 new part numbers in the ERP system, using an Excel spreadsheet as the source data (a custom program would need to read the Excel spreadsheet and plug that information into the formatting syntax expected by the API). The API could also be used for creating work orders in the ERP system which would then be used to produce the parts, receive purchased parts into inventory, issue parts in inventory to work orders, receive completed parts into inventory, create shipping documents to send the parts to customers, generate invoice records to accept payment from customers, and a variety of other types of documents. I have been using Microsoft C# 2015 to interface with the API, and the ERP company helpfully includes a standalone executable (.EXE) that is able to test the API to make certain that all is working well with the API communication to the Oracle database through the Oracle Managed Data Provider through .Net. This API replaces an older API that exposed a COM interface, which I previously used extensively to automate the creation of various types of documents.

Why have I been fighting with the application programming interface for this ERP system? To err is human, but two errors does not compute. While trying to determine how to use the API, I copied a code sample from the API documentation into a new project in Microsoft C# 2015. The documentation indicates that the methods for using the API are similar to those that I used in the older API that exposed a COM interface, so it appeared that the process would be relatively easy transition to the new API. That was my thought, any way, until I attempted to run the program.

That cannot be good. I suppose that it is possible that the database schema has non-enabled constraints, as it had just gone through a conversion from an earlier version of the ERP system to the latest version a couple of days prior. Let’s check:

SELECT CONSTRAINT_NAME, CONSTRAINT_TYPE, TABLE_NAME, STATUS FROM DBA_CONSTRAINTS WHERE OWNER='SYSADM' AND STATUS<>'ENABLED'; no rows selected

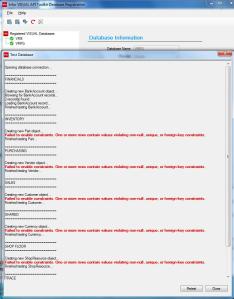

I guess that is not the cause of the error. Let’s take the code out of a try block, and run the program again (click the picture for a larger view):

A System.Data.ConstraintException in LsaCore.dll, Failed to enable constraints. One or more rows contain values violating non-null, unique, or foreign-key constraints error. Ouch, what did I do wrong? I was just trying to create a new part number in the database, and the exception was thrown before any part specifications were provided. Maybe it is because I am trying to develop on a 64 bit computer, or maybe there is some missing Windows registry entry that I still need to create?

Let’s try the standalone executable (.EXE) that the ERP provider distributes to test the API – on a 32 bit computer that was used for a test deployment of the new version of the ERP system. This computer was an otherwise fresh install of Windows 7 with all security updates installed prior to the deployment of the new version of the ERP system (click the picture for a larger view):

That “Failed to enable constraints. One or more rows contain values violating non-null, unique, or foreign-key constraints.” error message is a bit too familiar at this point. I need a 10046 trace at level 12 to see what might be triggering the constraints error. Logging into the database as the SYS user using SQL*Plus, I created an after logon trigger to enable a 10046 trace for my C# test program as well as the API test tool provided with the ERP system:

CREATE OR REPLACE TRIGGER LOGON_CAPTURE_10046 AFTER LOGON ON DATABASE DECLARE SHOULD_EXECUTE INTEGER; TRACEFILE VARCHAR2(150); BEGIN SELECT DECODE(SUBSTR(UPPER(PROGRAM),1,5),'VISUA',1,0)+DECODE(SUBSTR(UPPER(PROGRAM),1,5),'VMFGC',1,0) INTO SHOULD_EXECUTE FROM V$SESSION WHERE SID=(SELECT SID FROM V$MYSTAT WHERE ROWNUM=1); IF SHOULD_EXECUTE > 0 THEN TRACEFILE := 'ALTER SESSION SET TRACEFILE_IDENTIFIER = '''||USER||TO_CHAR(SYSDATE,'YYMMDDHH24MI')||''''; EXECUTE IMMEDIATE TRACEFILE; EXECUTE IMMEDIATE 'ALTER SESSION SET MAX_DUMP_FILE_SIZE=UNLIMITED'; EXECUTE IMMEDIATE 'ALTER SESSION SET TIMED_STATISTICS=TRUE'; EXECUTE IMMEDIATE 'ALTER SESSION SET EVENTS ''10046 TRACE NAME CONTEXT FOREVER, LEVEL 12'''; END IF; END; /

Repeating the test with my C# test program, I found that a few 10046 trace files were created on the database server each time I ran the C# test program. One of the 10046 trace files included:

PARSE ERROR #425887032:len=42 dep=0 uid=159 oct=3 lid=159 tim=2402951856734 err=904 SELECT DB_VERSION FROM APPLICATION_GLOBAL WAIT #425887032: nam='SQL*Net break/reset to client' ela= 2 driver id=1413697536 break?=1 p3=0 obj#=-1 tim=2402951856800 WAIT #425887032: nam='SQL*Net break/reset to client' ela= 559 driver id=1413697536 break?=0 p3=0 obj#=-1 tim=2402951857369 WAIT #425887032: nam='SQL*Net message to client' ela= 1 driver id=1413697536 #bytes=1 p3=0 obj#=-1 tim=2402951857387

That is interesting. There is no column named DB_VERSION in the APPLICATION_GLOBAL table, but there is a column named DBVERSION. Maybe that is the cause of the Failed to enable constraints error? After double-checking the table definition, the column is in fact supposed to be named DBVERSION, not DB_VERSION, so maybe the programmer that created the API DLLs made a typo?

Taking a look at another trace file, I found another potential problem:

PARSE ERROR #415492776:len=45 dep=0 uid=159 oct=3 lid=159 tim=2402951781868 err=942 SELECT DB_VERSION FROM VQ_APPLICATION_GLOBAL WAIT #415492776: nam='SQL*Net break/reset to client' ela= 2 driver id=1413697536 break?=1 p3=0 obj#=-1 tim=2402951781960 WAIT #415492776: nam='SQL*Net break/reset to client' ela= 4892 driver id=1413697536 break?=0 p3=0 obj#=-1 tim=2402951786862 WAIT #415492776: nam='SQL*Net message to client' ela= 0 driver id=1413697536 #bytes=1 p3=0 obj#=-1 tim=2402951786911

There is no table in the database named VQ_APPLICATION_GLOBAL, and per the table creation script for the ERP system, that table probably should not exist. Maybe the programmer that created the API DLLs made another typo? Creating a virtual DB_VERSION column to see if there is any improvement:

ALTER TABLE APPLICATION_GLOBAL ADD (DB_VERSION NVARCHAR2(10) GENERATED ALWAYS AS (TRIM(DBVERSION)));

Well, that took care of the Failed to enable constraints error, but now I have a new problem when I run the C# test program:

What does “Dataspace name VMFG not found” mean? Is that an improvement over the Failed to enable constraints error? Let’s give the standalone executable (.EXE) that the ERP provider distributes to test the API a go on the 32 bit computer (click the picture for a larger view):

“Dataspace name VMFG not found” – at least there is some level of consistency.

Switching back to the C# test program, I decided to enable a bit more of the options in the Exception Settings window (click the picture for a larger view):

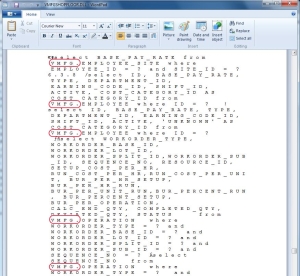

So, the “Dataspace name VMFG not found” error was thrown in the LsaDataLogic.TableDefinition.LoadTableDefinitions procedure in LsaCore. That certainly is specific. But, where is “VMFG” coming from, that is not the name of the database that I am trying to work with, although that is the name of a database that is frequently configured for this ERP system. For fun I opened one of the API DLL files using Wordpad (click the picture for a larger view):

I see VMFG. prefixing what appears to be every table that is in a SELECT type SQL statement within the DLL. After checking with one of the most experienced ERP support people at the ERP company, the “Dataspace name VMFG not found” error was a complete mystery. No one in support had ever seen that particular error message. The “Failed to enable constraints. One or more rows contain values violating non-null, unique, or foreign-key constraints.” error that I saw earlier was also a complete mystery at that line in the C# test code.

Taking another look at the multiple 10046 trace files that were created before I tried creating the virtual DB_VERSION column, I found that the API was actually sending at least four invalid SQL statements to the database:

SELECT DB_VERSION FROM APPLICATION_GLOBAL SELECT DB_VERSION FROM VQ_APPLICATION_GLOBAL SELECT DBVERSION , SITE_ID , COMPANY_MANAGER FROM APPLICATION_GLOBAL SELECT APP_VERSION , PAY_HELP , HR_HELP FROM APPLICATION

The API was also using two different techniques to identify the name of the primary key column for a table – that table does not, and should not have a primary key per the table creation script for the ERP system:

SELECT COLS.COLUMN_NAME AS COLNAME , COLS.POSITION AS PKCOLSEQNUM FROM ALL_CONSTRAINTS CONS , ALL_CONS_COLUMNS COLS WHERE COLS.TABLE_NAME = 'APPLICATION_GLOBAL' AND CONS.CONSTRAINT_TYPE = 'P' AND CONS.CONSTRAINT_NAME = COLS.CONSTRAINT_NAME AND CONS.OWNER = COLS.OWNER ORDER BY COLS.TABLE_NAME , COLS.POSITION SELECT I.INDEX_NAME AS INDEX_NAME FROM USER_INDEXES I WHERE I.TABLE_NAME = 'APPLICATION_GLOBAL' AND I.TABLE_OWNER = 'SYSADM' AND I.UNIQUENESS = 'UNIQUE' ORDER BY I.INDEX_NAME

Wow! I asked the experienced ERP support person if any of the above makes sense. I am not sure if I ever received a response to that question.

So, I enabled tracing for the Oracle Managed Data Provider for .Net driver used by the API with a modification to the MACHINE.CONFIG file found in the folder C:\Windows\Microsoft.NET\Framework\v4.0.30319\Config. Just before the end of the configSections section of the file (about ½ way through the file – just before the line </configSections>) I added the following line:

<section name="oracle.manageddataaccess.client" type="OracleInternal.Common.ODPMSectionHandler, Oracle.ManagedDataAccess" />

Next, just before the </configuration> line at the end of the file I added the following lines (specify the correct location of the tnsname.ora file on the tns_admin line):

<oracle.manageddataaccess.client> <version number="*"> <settings> <setting name="tns_admin" value="C:\Oracle\product\11.2.0\client_1\network\admin\" /> <setting name="TraceOption" value="1" /> <setting name="TraceLevel" value="127" /> <setting name="TraceFileLocation" value="C:\Trace\" /> </settings> </version> </oracle.manageddataaccess.client>

I then created a folder named Trace in the root of the C: drive (note that if UAC (User Access Control) is enabled, it may be necessary to grant everyone full permissions to that folder if it is located in the root of the C: drive). The generated trace files initially did not seem to provide any additional insight into the problem beyond what was found in the 10046 trace files.

Earlier this week the experienced ERP support person arranged for an cross-continent video conference call with several other people at the ERP company to help identify the source of the problems that I experienced with the API for the ERP system. That video conference call lasted a bit over two and a half hours, with no solution, but the conference call included a demonstration that the API for the ERP system does work with the sample database that is used by the ERP company. The support person even went so far as to record and send to me a Process Monitor trace of the successful test execution, and he also enabled a 10046 trace of the successful test execution. We only spent a couple of minutes scanning through the 10046 trace files during the call. Oddly enough, during the successful test execution, I noticed that the following two SQL statements appeared in his 10046 trace files with parse errors:

SELECT DB_VERSION FROM APPLICATION_GLOBAL SELECT DB_VERSION FROM VQ_APPLICATION_GLOBAL

Interesting, and when I pointed out those two parse errors (again, along with the other two that I mentioned earlier) to the people on the video conference call, no one knew why those SQL statements were attempted to be executed. Along with the Process Monitor trace, the senior support person sent a copy of their sample database to me, exported using Datapump, so that I could try to understand just what was corrupt on the essentially barebones Windows 7 32 bit computer.

After finding that the ERP company’s sample database worked fine with my essentially barebones Windows 7 32 bit computer, I made a somewhat important conclusion. The “Dataspace name VMFG not found” error is bad news, essentially it means that the API does not think that it is accessing a Visual Manufacturing database. The DB_VERSION queries against the APPLICATION_GLOBAL table and VQ_APPLICATION_GLOBAL are apparently supposed to fail! But why could someone on that 2.5+ hour cross-continent video conference call not come to the same conclusion? I know that two of the people on the conference call have been heavily involved with the ERP system development for well over 15 years, so the people are undoubtedly very knowledgeable about the subject.

After dropping the virtual column that I created, I then repeated the C# test program’s tests with the two databases. Comparing the trace files for my company’s database and the ERP company’s sample database, I found a difference (see the picture below – click for a larger view).

Ah, I found a difference. With the sample database, after the API’s DLL performed an OracleDataReader.Read() call, it executed an OracleDataReader.NextResult() call. With my company’s database, after the OracleDataReader.Read() call, the API’s DLL performed an OracleDataReader.Fill() call followed by an OracleDataReader.Close() call. Buy why? Here is the SQL statement that appeared in the trace file just prior to the highlighted line:

SELECT ACTIVATION_KEY , ACTIVITY_UDF_LABELS , ALLOW_EMAIL_DOC , AUDIT_REPORT_TIMES , BARCODE_MULTI_JOB , COMPANY_ADDR_1 , COMPANY_ADDR_2 , COMPANY_ADDR_3 , COMPANY_CITY , COMPANY_COUNTRY , COMPANY_NAME , COMPANY_STATE , COMPANY_ZIPCODE , CONFIGURATION_KEY , CUST_PRICE_EFF_REQ , CUST_UDF_LABELS , DBVERSION , DEF_OLDEST_INV_TYPE , DOC_UDF_LABELS , DOCUMENT_DIRECTORY , DRAWING_FILE_PATH , DRAWING_LOCATOR , DRAWING_VIEWER , EURO_CURRENCY_ID , FILTER_AVAIL_TRACE , ID , INSTALL_DATE , LAST_CONVERT_DATE , MAXIMUM_USERS , MFG_INTERFACE_USED , PART_UDF_LABELS , PLM_ENABLED , PLM_URL , PROGRAM_PATCH_LVL , PROJ_UDF_LABELS , PURC_QUOTE_TYPE , SD_SUBGROUP_MIN , SERIAL_NO , SESSION_TIMELIMIT , SHOP_UDF_LABELS , SQLBASE_DATABASE_VERSION , SSO_ENABLED , STORE_MACROS_IN_DB , TABLE_PATCH_LVL , TRIGGER_PATCH_LVL , VALIDATE_LOOKUPS , VEND_UDF_LABELS , VISUAL_USER_GROUP , VQ_DBNAME , VQ_DIRECTORY , VQ_ENABLED , VQ_QUERY_USE , VR_UPDATES , WFL_CMNT_PWD_REQ , WO_PRIORITY_PATH , XBAR_SUBGROUP_MIN FROM APPLICATION_GLOBAL APPLICATION_GLOBAL ORDER BY ID

The Oracle Managed Data Provider’s OracleCommand object for that SQL statement, when I executed it in another C# test program, indicated that the expected row length is 10,238 bytes. A problem? After trying a couple of changes in my database’s APPLICATION_GLOBAL table, I noticed that the PART_UDF_LABELS, VEND_UDF_LABELS, and CUST_UDF_LABELS columns in the ERP company’s sample database were all set to NULL. Below is the contents of the PART_UDF_LABELS column in my company’s APPLICATION_GLOBAL table:

"% of Plate","Heat Code/Material","Customer ID","Gross Weight","Vendor","Price Each","Surcharge","Freight","EAU (Cpy to Planning Tab)","FAB/COMB"

What, a difference between a sample database and a production database (one with 20+ years of transaction history)? 🙂

I set the PART_UDF_LABELS, VEND_UDF_LABELS, and CUST_UDF_LABELS columns to all be NULL, as they are in the sample database. All tests in API test program were successful with my company’s database with all three of those columns set to NULL. For sake of completeness, I then copied only the original PART_UDF_LABELS column value from my company’s database to the sample database, and managed to cause the API test program to fail with the sample database. Interesting…

I reported my findings to the senior support person at the ERP company. While waiting for a follow up from him (he needs to be able to reproduce my results), I performed some additional testing. The PART_UDF_LABELS, VEND_UDF_LABELS, and CUST_UDF_LABELS columns, as well as several other columns in that table, are defined as NVARCHAR2(250) data types, allowing up to 250 characters to be stored per row. The PLM_URL column in that table is defined as NVARCHAR2(2000), allowing up to 2,000 characters to be stored per row. I found that by putting the following value into the PART_UDF_LABELS column, the ERP company’s API test program would complete successfully:

"% of Plate","Heat Code/Material","Customer ID","Gross Weight"

Adding back a few more characters to that column value caused the ERP company’s API test program to fail the tests:

"% of Plate","Heat Code/Material","Customer ID","Gross Weight","Vendor"

I found that if the PART_UDF_LABELS, VEND_UDF_LABELS, and CUST_UDF_LABELS columns contained up to 64 characters, the ERP company’s API test program completed the tests successfully. 65 characters caused the API test program to fail the tests. But, what about that column (PLM_URL) that permits up to 2,000 characters to be stored, surely that column cannot have a 64 character limit for companies that need to automate document creation through the ERP company’s API? Yep, more than 64 characters in that column will also cause the API tests to fail.

To err is human, but two errors does not compute (or was it three errors identified in this article).

Leave a comment